Question 4/13 fast.ai v3 lecture 12

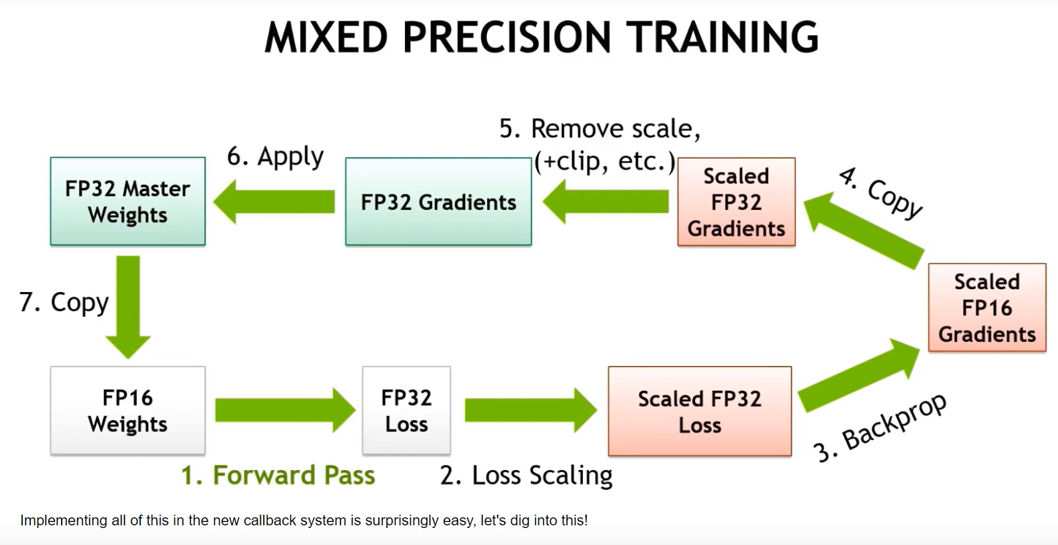

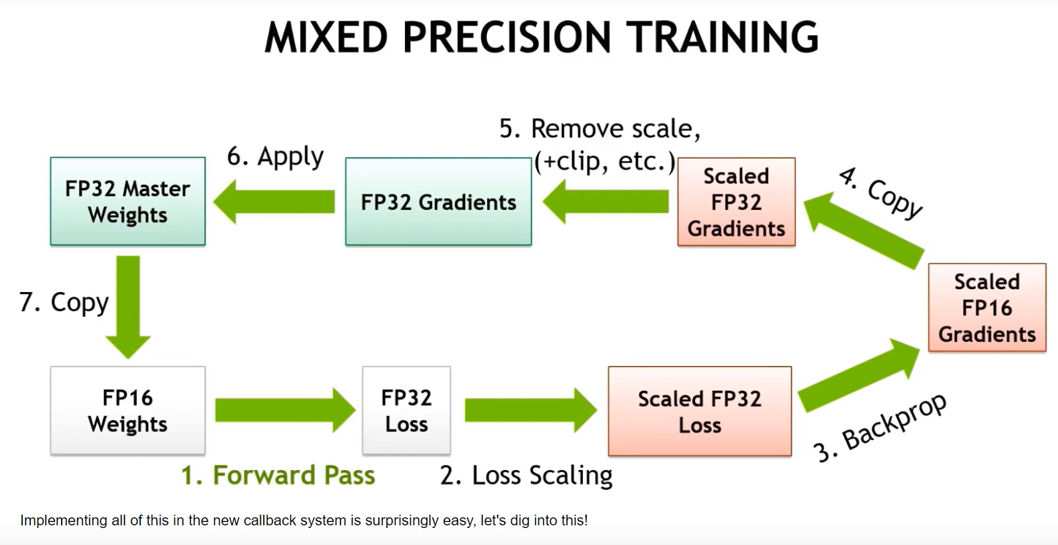

Training using half-precision floating point (fp16) can be up to 3x faster. When training with fp16, are all calculations done using half-precision floats?

Answer

Relevant part of lecture

Supplementary material

Later in the lecture: fp16 sometimes trains a little better than fp32. Maybe it has some regularizing effect? Generally, the results are very close together.
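
As a rough illustration of why mixed-precision training usually keeps some steps in fp32: in the common recipe, the forward and backward passes run in fp16, while the weight update is applied to an fp32 copy of the weights. The sketch below is not code from the lecture. It emulates fp16 and fp32 rounding with Python's `struct` module, and the weight value, update size, and step count are made-up numbers chosen only to show the effect.

```python
import struct

def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]

def to_fp32(x: float) -> float:
    """Round a Python float to the nearest IEEE single-precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]

# Hypothetical numbers: a weight near 1.0 and a small update (lr * grad).
update = to_fp16(1e-4)  # the gradient itself is computed in fp16
steps = 1000

# Case 1: the weight is stored and updated entirely in fp16.
# Near 1.0, neighbouring fp16 values are 2**-10 (about 0.00098) apart,
# so adding 0.0001 rounds straight back to 1.0 on every step.
fp16_weight = to_fp16(1.0)
for _ in range(steps):
    fp16_weight = to_fp16(fp16_weight + update)

# Case 2: an fp32 "master" copy of the weight receives the same updates.
fp32_weight = to_fp32(1.0)
for _ in range(steps):
    fp32_weight = to_fp32(fp32_weight + update)

print("fp16-only weight:  ", fp16_weight)
print("fp32 master weight:", fp32_weight)
```

The fp16-only weight never moves, while the fp32 copy accumulates the updates to roughly 1.1. Small updates being rounded away like this is one reason the weight update is kept in single precision.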